Day 2 of My 100-Day DevOps Challenge: Understanding SDLC and Operating System Fundamentals

Introduction

Today's DevOps learning centered on two fundamental concepts: the Software Development Life Cycle (SDLC) and operating system architecture. Both underpin DevOps practice, and understanding them makes it easier to see how modern software development and deployment fit together.

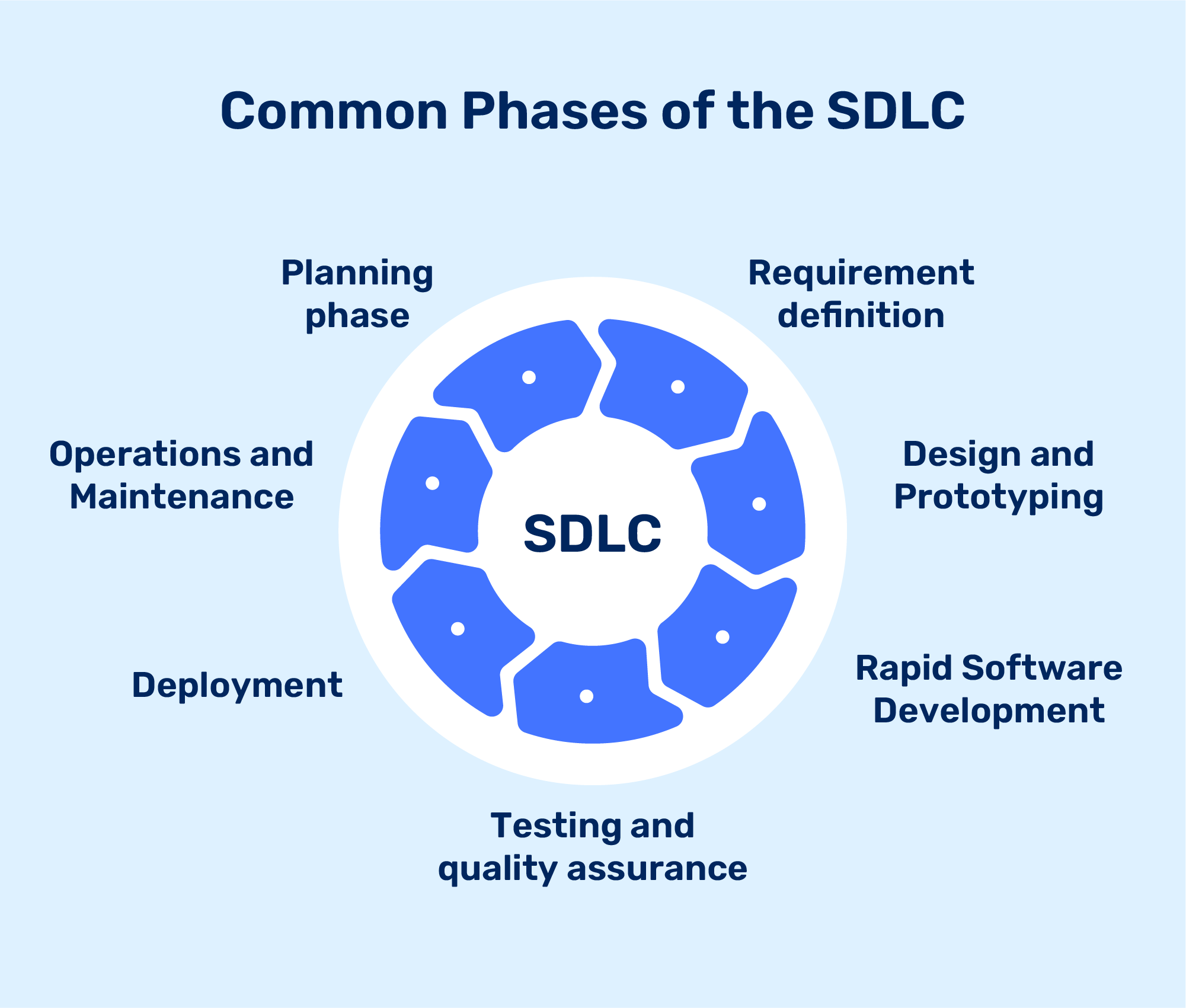

Understanding SDLC

The Software Development Life Cycle represents the systematic approach to software creation and maintenance. It begins with planning, where requirements are gathered and project scope is defined. This is followed by the implementation phase, where developers bring the planned features to life through code. Once the implementation is complete, the software undergoes thorough testing to ensure quality and eliminate bugs. After passing quality assurance, the software moves to deployment, where it's released to the production environment. The final stage, maintenance, involves ongoing support and updates to keep the software running efficiently.
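To make the ordering of the stages concrete, here is a minimal sketch that models them as an ordered enumeration. The names `Stage` and `next_stage` are my own for illustration and do not come from any SDLC standard or tool. Real projects often loop back to earlier stages, which this linear toy model ignores.

```python
from enum import IntEnum
from typing import Optional


class Stage(IntEnum):
    """SDLC stages in the order described above (hypothetical naming)."""
    PLANNING = 1
    IMPLEMENTATION = 2
    TESTING = 3
    DEPLOYMENT = 4
    MAINTENANCE = 5


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the stage that follows `current`, or None after maintenance."""
    if current is Stage.MAINTENANCE:
        return None
    return Stage(current + 1)


if __name__ == "__main__":
    # Walk through the life cycle from planning to maintenance.
    stage: Optional[Stage] = Stage.PLANNING
    while stage is not None:
        print(stage.name.title())
        stage = next_stage(stage)
```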

DevOps: The Integration Layer

DevOps changes how development and operations relate to each other. Rather than treating them as separate entities, DevOps serves as a bridge between these traditionally siloed teams. This integration enables closer communication and collaboration throughout the entire software development life cycle. By bringing these teams together, DevOps aims for faster deployments, more reliable updates, and better overall system stability.

Operating System: The Foundation

The operating system serves as the intermediary between applications and hardware. This role is essential because ordinary applications run in user mode and cannot directly access hardware such as the CPU, memory, storage, and I/O devices. Instead, they ask the OS to act on their behalf, typically through system calls. The OS effectively acts as a translator, managing resources and coordinating communication between the software and hardware layers.
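As a small illustration of this intermediary role, the sketch below writes to a file and reads it back using Python's `os` module, whose functions are thin wrappers over operating system calls. The helper name `write_and_read_back` is mine and purely illustrative. The program never touches the disk itself; it only holds a file descriptor and asks the OS to do the work.

```python
import os
import tempfile


def write_and_read_back(message: bytes) -> bytes:
    """Write bytes to a temporary file and read them back via OS-level calls."""
    # The OS creates the file and hands back a descriptor (a small integer).
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, message)            # ask the OS to write the bytes
        os.lseek(fd, 0, os.SEEK_SET)     # move the file offset back to the start
        return os.read(fd, len(message)) # ask the OS to read them back
    finally:
        os.close(fd)                     # release the descriptor
        os.remove(path)                  # clean up the temporary file


if __name__ == "__main__":
    print(write_and_read_back(b"hello from user space"))
```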

Resource Management

Resource management is one of the OS's primary functions. The operating system handles CPU allocation, ensuring that multiple processes can make progress even on a single-core processor by switching rapidly between them. Memory management involves allocating RAM to different applications and, when RAM runs short, swapping less-used data out to disk. The OS also manages file systems, organizing data in a structured way: Unix-based operating systems use a single tree rooted at /, while Windows gives each drive its own root (C:\, D:\, and so on).
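The rapid task switching described above can be sketched as a toy round-robin simulation. This is a simplified model under my own assumptions, not how any real scheduler is implemented: the function name `round_robin`, the task names, and the time units are all hypothetical. Each task gets the CPU for at most one `quantum`, then goes to the back of the queue if it still has work left.

```python
from collections import deque
from typing import Dict, List


def round_robin(burst_times: Dict[str, int], quantum: int) -> List[str]:
    """Return the order in which tasks receive CPU time slices."""
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    # Only tasks that actually need CPU time enter the ready queue.
    queue = deque((name, t) for name, t in burst_times.items() if t > 0)
    timeline: List[str] = []
    while queue:
        name, remaining = queue.popleft()
        timeline.append(name)        # this task runs for one time slice
        remaining -= quantum
        if remaining > 0:
            # Not finished yet: go to the back of the queue.
            queue.append((name, remaining))
    return timeline


if __name__ == "__main__":
    print(round_robin({"editor": 3, "browser": 5, "backup": 2}, quantum=2))
```

With a small quantum the tasks interleave, which is why a single core can appear to run several programs at once.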

Kernel Architecture

The kernel represents the core of any operating system, serving as the foundation for all system operations. It handles critical tasks such as:

Process Management

Memory Allocation

Device Driver Integration

System Security

Hardware Communication

There are three main types of kernels, each with its own approach to system management. Monolithic kernels, like Linux, run core OS services such as scheduling, memory management, file systems, and device drivers together in kernel mode. Microkernels keep only minimal functionality in the kernel itself and push other services out to user space. Hybrid kernels, such as Windows NT, combine elements of both approaches.

Key Technical Insights

Process isolation is one of the most important concepts in modern operating systems. Each process runs in its own protected address space, so one application cannot read or overwrite another's memory by accident, which improves system stability. On multi-core systems, true parallel processing becomes possible, with different cores running separate processes at the same time.
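Here is a minimal sketch of process isolation, assuming a Python interpreter is available to launch as a child process. The names `counter`, `CHILD_CODE`, and `run_child` are hypothetical. The child sets its own `counter` to 100, but because it runs in a separate process with separate memory, the parent's `counter` is untouched.

```python
import os
import subprocess
import sys
from typing import Tuple

counter = 0  # lives in the parent process's memory

# Code the child process will run: it sets its own `counter` and reports back.
CHILD_CODE = "import os; counter = 100; print(os.getpid(), counter)"


def run_child() -> Tuple[int, int]:
    """Start a separate Python process and return (child_pid, child_counter)."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        capture_output=True,
        text=True,
        check=True,
    )
    pid_text, counter_text = result.stdout.split()
    return int(pid_text), int(counter_text)


if __name__ == "__main__":
    child_pid, child_counter = run_child()
    print(f"parent pid={os.getpid()} counter={counter}")
    print(f"child  pid={child_pid} counter={child_counter}")
```

The two processes report different process IDs, and the only way the child's value reaches the parent is through an explicit channel, here the child's standard output.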

Memory management in modern operating systems is dynamic. The OS tracks how memory is being used and reallocates it as needed between active and inactive applications, for example by swapping out memory that an idle application is not using so that active ones have more RAM. This keeps resource utilization efficient while maintaining system performance.

Conclusion

Understanding these fundamental concepts is essential for any DevOps practitioner. They provide the foundation for making informed decisions about system architecture, performance optimization, and security implementation. As we continue this learning journey, these concepts will repeatedly surface in practical applications and tool usage.